November 25th, 2013

I Think I’ve Seen This One Before: Learning to Identify Disease

Paul Bergl, M.D.

Nothing puts more fear into the heart of an internist than a dermatologic chief complaint. And for good reason: we have very little exposure to the breadth of the field. To us, all rashes seem to be maculopapular, all bumps are pustules… or was that nodules?

It’s not that we internists don’t care about the skin or don’t appreciate its complexity. Rather, we simply haven’t seen enough bumps, rashes, and red spots to sort them all out consistently.

On the topic of pattern recognition in medicine, an oddly titled NEJM Journal Watch piece Quacking Ducks grabbed my attention recently. The commentary by Mark Dahl summarizes a J Invest Dermatol article by Wazaefi et al. that discusses the pattern identification and other cognitive processes involved in discerning suspicious nevi. I will try to distill the interesting discussion to the main points of Dr. Dahl’s summary and the index article:

- Experienced dermatologists use other cognitive processes besides the “ABCD” method for finding suspicious nevi.

- Most healthy adults have only two or three dominant patterns of nevi on their bodies.

- Deviations from the patient’s own pattern usually represent suspicious nevi. These deviations are referred to as “ugly ducklings.”

- Even untrained practitioners can cluster nevi based on patterns and can identify which nevi deviate from the patterns.

- However, expert skin examiners tend to cluster nevi more reliably and into a smaller number of groups.

- Identifying potential melanomas by seeking out “ugly duckling” nevi is both an exceedingly simple and cognitively complex means of finding cancer.

So, what is the take-home point? To make diagnoses, dermatologists use their visual perception skills, some of which are innate and some of which are honed through practice. While technology threatens to overtake the task of perception — see the MelaFind device, for example — human perceptiveness is still difficult to qualify, quantify, and teach.

A colleague of mine and a faculty radiologist at my institution, David Schacht, has pondered the very question of visual perceptiveness among trainees in his own specialty of mammography. As you probably realize, computer-aided diagnosis has risen to prominence as a way to improve radiologists’ detection of subtle suspicious findings on mammograms. These computerized algorithms lessen the chance of false-negative tests. However, a radiologist ultimately still interprets the study; as such, radiologists still need training in visual perception. But how does a radiologist acquire this “skill”? Dr. Schacht hypothesizes that radiology residents who review a large series of enriched mammograms will have better cancer-detection rates. In other words, he hopes that intensive and directed case review will improve visual perception.

Clearly, mammographers and dermatologists are not alone in making diagnoses by what they see. Every field relies on some degree of astute observation that often becomes second nature over time. Even something as simple as the general appearance of a patient in the emergency room holds a trove of clues.

My question is, can these perceptive abilities be better taught to trainees or even be programmed into a computer? Or should we simply assume that experience itself drives some otherwise unexplained improvement in visual diagnosis?

If the former is true, then we ought to seek a better understanding of how physicians glean these skills. If man should stay ahead of machine, then we clinicians should hone our intuition and our abilities to recognize visual patterns. Moreover, we should design education systems that promote more visual engagement and activate the cortical pathways that underpin perceptiveness.

On the other hand, if experience itself imbues clinicians with better perceptive skills, then we really ought to maximize the number of clinical exposures for our trainees. No matter what the field, students and residents might simply need to see a lot more cases, either simulated or real.

As human or computer perceptiveness evolves, even the most expert eyes or finest computer algorithms will still be limited. And ultimately, any homely duckling of a nevus probably deserves a biopsy. But with biopsies, aren’t we trading one set of expert eyes for another (in this case, the pathologist’s) when we send that specimen to the lab?

In the end, the prevailing message seems to be that repeated experiences breed keen and reliable observations. We cannot discount the very basic human skills of visual cues. We should continue to seek ways to refine, study, and computerize our own perceptiveness.

November 14th, 2013

The Google Generation Goes to Med School: Medical Education in 2033

Paul Bergl, M.D.

This past week, I attended the annual AAMC meeting where the question, “What will medical education look like in 2033?” was asked in a session called “Lightyears Beyond Flexner.” This session included a contest that Eastern Virginia Medical School won by producing a breath-taking and accurate portrayal of 2033. I encourage you to view the excellent video above that captures the essence of where academic medicine is headed.

After this thought-provoking session, I too pondered academic medicine’s fate. I would like to share my reflections in this forum.

Without question, technology stood out as a major theme in this conference. And for good reason: clearly it is already permeating every corner of our academic medical lives. But as technology outpaces our clinical and educational methods, how exactly will it affect our practices in providing care and in training physicians?

Our educational systems will evolve in ways we cannot predict. But in reality, the future is already here, as transformations are already afoot. MOOCs (massive open online courses, for the uninitiated) like Coursera are already providing higher education to the masses and undoubtedly will supplant lectures in med schools and residencies. In a “flipped classroom” era, MOOCs will empower world-renowned faculty to teach large audiences. Meanwhile, local faculty can mentor trainees and model behaviors and skills for learners. Dr. Shannon Martin, a junior faculty member at my institution, has proposed the notion of “flipped rounds” in the clinical training environment, too. In this model, rounds include clinical work and informed discussions; reading articles as a group or having a “chalk talk” are left out of the mix. In addition, medical education will entail sophisticated computer animations, interactive computer games for the basic sciences, and highly intelligent simulations. Finally, the undergraduate and graduate curricula will have more intense training in the social sciences and human interaction. In a globalized and technologized world, these skills will be at a premium.

But why stop at flipped classrooms or even flipped rounds? Flipped clinical experiences are coming soon too.

Yes, technology will revolutionize the clinical experience as well. Nowadays, we are using computers mainly to document clinical encounters and to retrieve electronic resources. In the future, patients will enter the exam room with a highly individualized health plan generated by a computer. A computer algorithm will review the patient’s major history, habits, risk factors, family history, biometrics, previous lab data, genomics, and pharmacogenomic data and will synthesize a prioritized agenda of health needs and recommended interventions. Providers will feel liberated from automated alerts and checklists and will have more time to simply talk to their patients. After the patient leaves the clinic, physicians will then stay connected with patients through social networking and e-visits. Physicians will even receive feedback on their patient’s lives through algorithms that will process each patient’s data trail: how often they are picking up prescriptions, how frequently they are taking a walk, how many times they buy cigarettes in a month. And of course, computers will probably even make diagnoses some day, as IBM’s Watson or the Isabel app aspire to do.

Yet even if Watson or Isabel succeeds in skilled clinical diagnosis, these technologies will not render physicians obsolete. No matter how much we digitize our clinical and educational experiences, humans will still crave the contact of other humans. We might someday completely trust a computer to diagnose breast cancer for us, but would anyone want a computer to break the bad news to our families? Surgical robots might someday drive themselves, but will experienced surgeons and patients cede ultimate surgical authority to a machine? A computer program might automatically track our caloric intake and physical activities, but nothing will replace a motivating human coach.

With all of these changes, faculty will presumably find time for our oft-neglected values. Bedside teaching will experience a renaissance and will focus on skilled communication. Because the Google Generation of residents and students will hold all of the world’s knowledge in the palm of their hands, they will look to faculty to be expert role models. Our medical educators will be able to create a truly streamlined, ultra-efficient learning experience that allows more face-to-face experiences with patients and trainees alike.

So where is academic medicine headed beyond Flexner? Academic physicians will remain master artists, compassionate advisers, and a human face for the increasingly digitized medical experience.

November 7th, 2013

Is the Overwhelming “Primary Care To-Do List” Driving Talented Residents Away?

Paul Bergl, M.D.

Is there really another guideline-based preventive measure to discuss today?

In my 3 years of residency, the nearly universal resident response to outpatient continuity clinic was a disturbing, guttural groan. I recognize that many aspects of primary care drag down even the most enduring physicians. But I have also found primary care — particularly with a panel of high-risk and complex patients — to be a welcome challenge. I recently spoke with one of my institution’s main advocates for academic primary care, who I know has wrestled with this standard resident reaction.

And we had a shared epiphany about one of the main deterrents driving promising residents away from primary care: inadequate training in prioritizing outpatient problems.

It’s easy to see how primary care quickly overwhelms the inexperienced provider. An astonishing number of recommendations, guidelines, screenings, and vaccinations must compete with the patient’s own concerns and questions. This competition creates an immense internal tension for the resident who knows the patient’s needs (cardiovascular risk reduction, cancer screenings, etc.) but is faced with addressing competing problems.

At my institution, the resident continuity clinic also houses a generally sicker subset of patients. Take these complex patients and put them in the room with a thoughtful resident who suffers from a hyper-responsibility syndrome, and you get the perfect mix of frustration and exhaustion.

There are diverse external pressures that also conspire to make the trainee feel like he should do it all: the attending physician’s judgment, government-measured quality metrics, and expert-written guidelines for care.

In this environment of intense internal and external pressures, how can we give residents appropriate perspective in a primary care clinic?

I would argue that residency training needs to include explicit instruction on how to prioritize competing needs. Maybe residents intuitively prioritize health problems already, but I’ve witnessed enough of my residents’ frustrations with primary care and preventive health. Let’s face it: there is probably a lot more value in a colonoscopy than an updated Tdap, but we don’t always emphasize this teaching point.

At times, perceptions of relative value can be skewed. “Every woman must get an annual mammogram” is a message hammered into the minds of health professionals and lay people. Yet counseling on tobacco abuse might give the provider and patient a lot more bang for the buck. Our medical education systems do not emphasize this concept very well. Knowing statistics like the number needed to treat (NNT) might be a rough guide for the overwhelmed internist, but the NNT does not take into account the time invested in clinic to arrange for preventive care or the cost of the intervention.
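For readers who want the arithmetic behind the NNT, here is a minimal sketch in Python. The NNT is the reciprocal of the absolute risk reduction (the event rate without the intervention minus the event rate with it). The function name and the event rates below are hypothetical, chosen only for illustration and not drawn from any study.

```python
import math


def number_needed_to_treat(control_event_rate: float, treated_event_rate: float) -> int:
    """Return the NNT: 1 / absolute risk reduction, rounded up to a whole patient."""
    absolute_risk_reduction = control_event_rate - treated_event_rate
    if absolute_risk_reduction <= 0:
        raise ValueError("No absolute risk reduction, so the NNT is undefined.")
    # Round away floating-point noise before taking the ceiling.
    return math.ceil(round(1 / absolute_risk_reduction, 9))


# Hypothetical example: events fall from 10% to 8% of patients with the intervention.
print(number_needed_to_treat(0.10, 0.08))
```

The only inputs are two event rates, which is a reminder of how narrow the statistic is.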

Worse yet, there are simply too many recommendations and guidelines these days. Sure, these guidelines are often graded or scored. But as Allan Brett recently pointed out in Journal Watch, many guidelines conflict with other societies’ recommendations or have inappropriately strong recommendations. Residents and experienced but busy PCPs alike are in no position to sift through this mess.

Our experts in evidence-based medicine need to guide us toward the most relevant and pressing needs: guidelines about guidelines, so to speak. We need our educational and policy leaders to help rein in the proliferation of practice guidelines rather than continuing to disseminate them.

Physicians in training have their eyes toward the potential prospects of pay-for-performance reimbursements and public reporting of physicians’ quality scores. No one wants to enter a career in which primary care providers are held accountable for an impossibly large swath of “guideline-based” practices.

So how might we empower our next generation of physicians to feel OK with simply leaving some guidelines unfollowed? In my experience, our clinician-educators contribute to the problem with suggestions like, “You know, there are guidelines recommending screening for OSA in all diabetics.” Campaigns like “Choosing Wisely” represent new forays into educating physicians on how to demonstrate restraint, but they do not help physicians put problems in perspective. Our payors don’t seem to have any mechanism to reward restraint or prioritization and can, in fact, skew our priorities further.

I hope that teaching relative value and the art of prioritizing problems will be a first and critical step toward getting the next generation of physicians excited about primary care.

October 3rd, 2013

Choosing Words Wisely

Paul Bergl, M.D.

“What do you think, Doctor?”

For a novice physician, these words can quickly jolt a relatively straightforward conversation into a jumble of partially formed thoughts, suppositions, jargon, and (sometimes) incoherent ramblings. Even for simpler questions, the fumbling trainee does not have a convenient script that has been refined through years of recitation. Thus, many conversations that residents have with patients are truly occurring for the first time. And unfortunately, this novelty can result in poorly chosen words that can have lasting effects. An inauspicious slip of the tongue could significantly alter the patient’s perceptions and decisions.

A recent study in JAMA Internal Medicine highlighted the connection between the words we choose and the actions our patients take. As Dr. Andrew Kaunitz reported in a summary of this article for NEJM Journal Watch, avoiding the word “cancer” in describing hypothetical cases of DCIS resulted in women choosing less aggressive measures and avoiding procedures like surgical resection. Dr. Kaunitz notes that the National Cancer Institute has also recommended against labeling DCIS as “cancer.”

I believe this recent study raises a major question at every level of medical education, particularly during residency. How do we counsel our physicians to counsel patients and communicate effectively? How do we choose words that appropriately convey the urgency of a diagnosis without scaring our patients into unnecessarily risky treatments?

I was fortunate to have gone to a medical school where our course directors were forward-thinking. Our preclinical curriculum included mentored sessions with practicing physicians and patient-actors. We were taught how to use motivational interviewing to facilitate smoking cessation, how to ask about a patient’s sexual orientation and gender identification, and even how to use the SPIKES mnemonic for breaking bad news.

Yet all of these simulations can never really prepare a trainee for the first time he or she is on the spot in the role of a real physician with a real patient. Counseling patients requires subtleties; no medical school curriculum could possibly address every situation. But as the above-referenced study by Omer et al. confirms, subtleties and shades of meaning matter.

Of course, not every situation that requires measured words will be so dramatic. The words we choose for more benign situations matter, too. How do I define a patient’s systolic blood pressure that always runs in the 130-139 mm Hg range? Do I tell him that he has “prehypertension,” or do I give him a pass in saying, “Your blood pressure runs a little higher than normal”? Would this patient exercise more if I choose “prehypertension”?

What do I say to my patient with a positive HPV on reflex testing of an abnormal Pap smear? “Your Pap smear shows an abnormality” or “an infection with a very common virus…” or “suspicious cells” or “an infection that might lead to cancer someday…” What effect will it have on how she approaches future abnormal results? How might she change her sexual habits? How might she view her partner or partners?

These days, med schools and residency programs are being increasingly taxed by the competing priorities in educating the 21st-century physician. As technology rapidly threatens to usurp our expertise in many domains of practice, communication will remain a cornerstone of our job. Communicating with patients will never be passe; no patient wants to be counseled by a computer. We must master these vital communication skills.

Unfortunately, studies like Omer et al. only touch the tip of the iceberg. How do we empower patients with information while not scaring them? When should we scare them with harsher language? How and where do we learn to choose the right words? And who decides?

Even a perfectly designed training system could not tackle all these questions. But perhaps we ought to be exposing our trainees to simulated conversations in which subtle word choices matter. Let’s face it: We could all do better at choosing our words wisely.

September 27th, 2013

Duty Hour Reform Revisited

Akhil Narang, M.D.

Resident duty hour reforms were discussed ad nauseam a few years ago. Everyone had their say – Program Directors (“In 2003 we instituted an 80-hour work week, in 2011 we switched to 16-hour shifts, what’s next – online residencies!?”), senior residents (“What? I have to write H&Ps again? I don’t even know my computer password!”), interns (“I thought I was done with cross-covering after this year”), graduating medical students (“I get to sleep in MY bed most of next year!”), and various supervising bodies (“This is what the public wants. Of course there is evidence that these reforms will work.”). Now it’s my turn: part of the last class to have experienced 30-hour call cycles as interns – the way it should/shouldn’t be (depending on your bias).

While lamenting to my Program Director during residency on how my class not only had a difficult intern year but also had to assume “intern responsibilities” during my junior and senior years, he gently reminded me of his experience as an intern. It was routine for him to care for more than 20 patients on the general medicine service. Moreover, the ICU was “open” and any of his patients transferred to the unit continued to be under his care. Generously assuming 1 day off in 7, he worked more 100-hour work weeks than he’d care to remember.

As a junior resident, I was on service with my Chair of Medicine, and he repeated many of the same stories of busy services and how the word housestaff came to be – the residents’ de facto house was the hospital. Was this dangerous? The unfortunate case of Libby Zion (and others) would suggest yes. Did my attendings become outstanding physicians, in part because of the rigorous training? Unequivocally.

Fast forward a few decades: for numerous reasons, including public pressure, an 80-hour work week with a maximum of 30 consecutive hours in-house (for a resident) and 16 consecutive hours (for an intern) is the new standard. In a matter of 16 hours, only so much can be accomplished. The work-up, diagnosis, and response to treatment can hardly be appreciated in this short time span. The resident, who is permitted to stay in-house for 30 hours, often completes what the intern didn’t have time to do and benefits from observing in real time the clinical course of the patient. Is this a disservice to the intern? Many would argue “yes.”

Interns now leave work after a maximum of 16 hours. The time away from the hospital is supposed to allow for a better work-life balance, enable restorative sleep, and prevent medical mistakes. A study by Kranzler and colleagues showed that this wasn’t the case. Interns did not report an increase in well-being, a decrease in depressive symptoms, more sleep, or fewer mistakes than previously.

What about patient care/outcomes? While early data from the 16-hour work day are still forthcoming, we do have recent data from the 2003 rule that capped the work week at a maximum of 80 hours. In a study published in August 2013, Volpp and colleagues examined mortality pre- and post-80-hour work weeks. More than 13 million Medicare patients (admitted to short-term, acute-care hospitals) who had primary medical diagnoses of acute MI, CHF, or GI bleed, or surgical diagnoses in general, orthopaedic, or vascular surgery were included in the study. The authors concluded that no mortality benefit was present in the early years after the 80-hour work week was implemented and that just a trend toward improved mortality was observed in years 4-5. We will start to see mortality data from the 16-hour rule in a few years, but I suspect that no significant improvements will occur in patient outcomes. In fact, medical knowledge and hands-on experience for interns might suffer.

Completing internship used to be a rite of passage, akin to pledging a fraternity. The duty hour changes have allowed interns to spend more time away from the hospital so that, theoretically, they are less tired and make fewer mistakes at work. In practice, this might not be the case. Unquestionably, the brutal hours that generations of past trainees faced were suboptimal, but it appears as if the current duty hour rules also might be less than ideal from a learning perspective. Hopefully, in the coming years, the ACGME will reevaluate its policies in light of the data it will see.

September 16th, 2013

Medical Interns – Not at the Bedside, but Not to Be Blamed

Paul Bergl, M.D.

If only all interns looked so happy on the job…

This past week in NEJM Journal Watch General Medicine, Abigail Zuger reviewed an article from the Journal of General Internal Medicine by Lauren Block et al. in which researchers examined how medical interns spend their time. The results from this time-motion study might be concerning but are not unexpected. The investigators found that interns on inpatient rotations spend only 12% of their time in direct patient care and spend only 8 minutes daily with each patient on their inpatient services. Dr. Zuger notes that this “distressing paucity” of direct patient care should cause leaders in graduate medical training to effect change in interns’ daily routines.

To those of us in training or just out of training, the reasons for less direct patient care are myriad and obvious:

- A focus on multidisciplinary care and ever-increasing specialization results in each medical patient having a dozen or more physicians, consultants, nurses, pharmacists, case managers, social workers, and therapists directly involved in care. At the core of this legion of providers stands the intern. This novice physician must field messages, pages, and advice from all arms of the treatment team. As such, the intern spends as much time coordinating care as he or she spends relaying messages and answering “quick questions,” which are never quick and rarely are questions.

- Patient acuity in academic centers also continues to rise. Patients on medical wards often are admitted with multiple comorbidities and in a state of disarray. Rare are the relatively straightforward admissions for uncomplicated pneumonia or CHF exacerbation. Thus, interns have to manage a number of active conditions, complex medication lists, and a barrage of patient data.

- In addition, the Centers for Medicare and Medicaid Services (CMS) pays us by DRGs and has also pressed us to prevent 30-day readmissions. CMS will soon ask us to admit more patients to observation status. How do all of these shifts in payment affect a house officer? The intern has to spend all the more time ensuring safe and timely discharges. Tasks like medication reconciliations and communicating with outpatient providers suck up even more of the intern’s day at the expense of face time with the patient.

- Finally, documentation steals many a precious minute from the day. The considerations of patient acuity and reimbursement add to the burden of documentation leading to bloated notes that take far too much time to construct.

I am probably too young to be so cynical, but I do not see a shift in these routines occurring any time soon. And without being excessively cantankerous, I feel obligated to ask, “Does the percentage of interns’ time spent in direct patient care matter?”

A smaller percentage of interns’ time spent directly interfacing with patients may not mean that patients get worse care. We don’t have any direct data that the distressing paucity of direct patient care is resulting in poor outcomes. Moreover, the very “non-patient” tasks outlined above are entirely necessary in today’s inpatient environment. For example, if a patient is started on a LMWH bridge to warfarin in the hospital, figuring out how the LMWH will be paid for and who will follow the INR post-hospitalization is as important as time spent at the patient’s bedside.

Of course, I am not suggesting that intern work is inherently rewarding or educational. Most of us embark on this career path because we value interaction with actual human beings, not because we like electronic note templates. I myself romanticize the days when internists actually took the time to perform thorough histories and physicals. But if we don’t encumber the interns with all of this work, who will do it?

September 11th, 2013

Oral Anticoagulation, Part I: Direct Thrombin Inhibitors

Akhil Narang, M.D.

When I started residency 4 years ago, warfarin was really the only anticoagulant widely used for prevention of stroke in patients with atrial fibrillation (AF) and in patients with venous thromboembolism (VTE). Although the coagulation cascade has been understood for decades, viable alternatives to warfarin have become available only recently. In this post, I hope to break down (at a macroscopic level) use of oral direct thrombin inhibitors (DTIs). In my next post, I will review Factor Xa inhibitors.

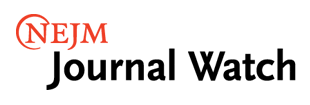

To start, let’s review the basics of clotting (figure above). Fibrin clots form after activation of the intrinsic and extrinsic pathways. Tissue injury initiates the intrinsic pathway, which comprises the vast majority of the coagulation cascade. Warfarin inhibits the vitamin K-dependent clotting factors (II, VII, IX, X) in addition to protein C and protein S. Warfarin acts across both the extrinsic and intrinsic pathways to prevent thrombus formation. Direct thrombin inhibitors and Factor Xa inhibitors both work in the common pathway of coagulation.

Most DTIs are administered parenterally and used in patients with ACS and in treatment of patients with heparin-induced thrombocytopenia. Oral DTIs have been slower to reach the market. While several are under development, dabigatran received FDA approval in 2010 for prevention of stroke in patients with nonvalvular AF. The RE-LY trial, published in 2009, showed that low-dose dabigatran (110 mg twice daily) was noninferior to warfarin for preventing stroke in patients with intermediate-risk nonvalvular AF (mean CHADS2 score 2.1). High-dose dabigatran (150 mg twice daily) was superior to warfarin in this population. The risk for major bleeding (compared with warfarin) was lower in the low-dose dabigatran group and similar in the high-dose dabigatran group. In 2013, the RELY-ABLE study, which extended the follow-up period (median of 4+ years) for patients in the RE-LY trial, showed similar rates of stroke and death in both dabigatran dose groups. In another recent analysis of the RE-LY population, in which cardiovascular endpoints were weighted, researchers concluded that both doses of dabigatran have comparable benefits in prevention of stroke when taking into account safety and efficacy.
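As a reminder of what the CHADS2 score mentioned above measures, here is a small sketch in Python. It assumes the standard scoring: 1 point each for congestive heart failure, hypertension, age 75 or older, and diabetes, and 2 points for prior stroke or TIA. The function and parameter names are my own and are only illustrative; this is not a clinical tool.

```python
def chads2_score(chf: bool, hypertension: bool, age: int,
                 diabetes: bool, prior_stroke_or_tia: bool) -> int:
    """Sum the CHADS2 points for one patient (range 0 to 6)."""
    score = 0
    score += 1 if chf else 0                  # C: congestive heart failure
    score += 1 if hypertension else 0         # H: hypertension
    score += 1 if age >= 75 else 0            # A: age 75 or older
    score += 1 if diabetes else 0             # D: diabetes mellitus
    score += 2 if prior_stroke_or_tia else 0  # S2: prior stroke or TIA counts double
    return score


# Hypothetical patient: 78 years old with hypertension and no other risk factors.
print(chads2_score(chf=False, hypertension=True, age=78,
                   diabetes=False, prior_stroke_or_tia=False))
```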

The bottom line is that dabigatran is efficacious for stroke prevention in nonvalvular AF patients. Not having to monitor one’s diet or INR is a practical advantage that dabigatran offers over warfarin. The inability to readily reverse bleeding associated with dabigatran should be a point of discussion with patients when choosing the appropriate anticoagulation strategy (especially in elders or patients with histories of bleeding); however, a dabigatran antidote will likely be available in the future.

Turning toward venous thromboembolism, dabigatran has been investigated for treatment of acute VTE and also for prevention of recurrent VTE. In both scenarios, dabigatran has shown positive results. In the RE-COVER study, published in 2009, patients with acute VTE were bridged with either warfarin or dabigatran (150 mg twice daily). No significant difference was found in the rates of recurrent VTE or major bleeding. Further trials (RE-MEDY and RE-SONATE) evaluated dabigatran versus warfarin or placebo in patients who completed at least 3 months of treatment for acute VTE. The results showed that dabigatran-treated patients had rates of recurrent VTE similar to (and rates of major bleeding lower than) those on warfarin. Interestingly, a higher incidence of ACS was seen in the dabigatran-treated group. Less surprisingly, when compared with placebo, dabigatran-treated patients had a lower rate of recurrent VTE (and higher rates of bleeding). As of now, dabigatran has not been FDA approved for treatment or prophylaxis of acute venous thromboembolism but is being used off-label for these indications by some clinicians.

To date, dabigatran is the sole oral DTI available in the U.S. and, as such, controls the majority market share in this class of medications. I anticipate newer generations of DTIs in the coming years. Stay tuned for the next post that will address Factor Xa inhibitors and their role in the treatment of atrial fibrillation and venous thromboembolism.

September 3rd, 2013

Benefits and Perils of Following the Literature Too Closely

Paul Bergl, M.D.

Don’t fall too far behind in the medical literature, but perhaps a little less vigilance is better for you.

As a resident, probably the most common piece of feedback one receives is, “Read more and expand your clinical knowledge base.” This critique is a standard and generic piece of feedback to encourage the younger generation to never quit in the endless pursuit of knowledge. As our erudite attendings know, medical knowledge always evolves and often reverses course. Thus, the trainee is reminded to establish the habit of keeping up on the literature early in his or her career.

Indeed there is merit to following the literature vigilantly. This past month, Vinay Prasad et al. published on “medical reversals” in the Mayo Clinic Proceedings journal. In their analysis, Prasad et al. reviewed a decade’s worth of original articles in the New England Journal of Medicine. The authors found that more than 100 original articles had overturned previous guidelines or accepted practices. Notable examples — some of which are now old news — included the following:

- The standard teaching of CPR with rescue breaths was reversed by well-designed trials showing that adequate compressions were the main objective in CPR.

- KDOQI guidelines in 2000 were updated to reflect a target hemoglobin between 11 and 13 g/dL in patients with CKD. These recommendations were subsequently refuted on the basis of a randomized controlled trial of epoetin alfa that demonstrated no major benefits and increased risk.

- Rosiglitazone was introduced in 1999 as a treatment for type 2 diabetes mellitus based on its ability to lower hemoglobin A1c. However, a meta-analysis in the New England Journal of Medicine later concluded this drug was associated significantly with cardiovascular death.

The list goes on and can be found in the article’s supplementary materials. If you’re inspired by Prasad’s findings, you ought to make every effort to “read more” and “keep current.”

Then again, maybe better advice for trainees would be, “Read when you can, but don’t worry if you end up falling a few years behind in the literature.” Sure, a revolutionary medical practice might arise, but even residents are unlikely to be so tuned out to the world that they won’t hear about the latest breakthrough somewhere. New treatments will surface; new drugs will be manufactured. The trainee may want to see a novel therapy survive a few years of validation in real clinical practice before stepping out onto a limb of uncertainty. If one aggressively tracks every advance in medicine, one also runs the risk of adopting a practice that later proves more harmful than anticipated.

At least one recently published article speaks in favor of this more lax approach toward the literature. As Paul Mueller highlighted in an NEJM Journal Watch article this past week, many meta-analyses add very little to the growing body of medical literature except growth of the body itself. If you haven’t read a meta-analysis on the pharmacotherapeutic options for fibromyalgia or supplements to prevent colon cancer in the last handful of years, don’t worry: The article you read in 2009 had most of the same studies. A more rational approach might be to pull meta-analyses on an as-needed basis rather than trying to stay ahead of the barrage of articles published weekly.

So what’s the best strategy for a trainee? I personally favor a “headlines, tweets, and abstracts” approach — not only for the time-strapped resident but for myself too. Skim a few journals each month, and subscribe to essential Twitter and RSS feeds. You might hit the right balance of staying current and staying above the fray.

August 28th, 2013

Vaccination Against Pertussis – Is It Worth the Trouble?

Paul Bergl, M.D.

According to a study in the BMJ, the pertussis vaccine appears to be 53% effective against clinical infection in a “real world” setting.

“Four out of four!” exclaimed a proud PGY1 as she handed me the billing sheet for her last patient in continuity clinic.

“Four out of four?” asked I.

“Yes, I gave all of my patients their updated Tdap today,” she boasted.

As her preceptor, I commended her for her commitment to routine health maintenance — you know, the supposedly routine tasks that, instead, routinely get pushed to the bottom of the patient’s problem list. I remarked that I had only administered a total of four Tdap vaccines in my 3 years in resident clinic.

Of course, I was exaggerating, but I admit that immunizations often got lost in the shuffle of resident continuity clinic. After an exasperating primary care encounter, the last thing I wanted to hear from my preceptor was, “Has she had her tetanus shot?” And I could imagine few things more defeating than quibbling with a patient over whether flu vaccines cause the flu, pneumococcal vaccines actually reduce the risk for pneumonia, and so on.

With so many competing priorities, so many new patients, and so many old dictations to scan — maybe someone had already vaccinated Mrs. Jones and actually documented it! — I often felt that the burden of vaccinations was too much to bear. But should I be chided for my negligence?

This week Paul Mueller of NEJM Journal Watch reported that the pertussis vaccine is only moderately effective in adults. The data derive from a large case-control study of patients under the care of the Kaiser Permanente group in northern California. The vaccine was reported as “53% effective” against clinical pertussis infection.

I actually pulled the BMJ article, and a few things stuck out:

- While the vaccine indeed reduced the odds of contracting pertussis, the majority of cases that did occur were not terribly severe. Only a total of 1.2% of patients with PCR-positive tests actually needed to be hospitalized; rates of hospitalization were not reported for PCR-negative controls.

- Most patients in the study had been vaccinated in the past 2 years. If immunity wanes over time, then 53% effective may be about as good as we will see in clinical practice.

- Effectiveness, the statistic of interest, is a measure of 1 minus the odds ratio. If one looks at the odds ratio, the statistics are a little less impressive.
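To make the arithmetic behind that last point concrete, here is a minimal Python sketch of how effectiveness relates to the odds ratio in a case-control design. The function names and the 2x2 counts are hypothetical and chosen only to reproduce an odds ratio of 0.47; they are not the counts from the BMJ study.

```python
def odds_ratio(vacc_cases, unvacc_cases, vacc_controls, unvacc_controls):
    """Odds of vaccination among cases divided by odds of vaccination among controls."""
    return (vacc_cases / unvacc_cases) / (vacc_controls / unvacc_controls)


def vaccine_effectiveness(or_value):
    """Effectiveness expressed as 1 minus the odds ratio."""
    return 1.0 - or_value


# Hypothetical counts for illustration only (NOT data from the study).
or_example = odds_ratio(vacc_cases=47, unvacc_cases=100,
                        vacc_controls=100, unvacc_controls=100)
ve_example = vaccine_effectiveness(or_example)

print(f"Odds ratio: {or_example:.2f}")
print(f"Effectiveness: {ve_example:.0%}")
```

Read this way, "53% effective" and "an odds ratio of 0.47" are the same result stated two ways; the second framing simply sounds more modest.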

I now feel a little more justified in my previous approach toward vaccines but am left torn over what to teach residents that I precept. If a patient has a short agenda and a clean bill of health, then the primary care provider has no excuses. However, if one spends 30 minutes counseling on smoking cessation, working toward better glycemic control, or advising colonoscopies, why worry about the Tdap?

Then again, perhaps the proud intern knew best. After all, this study confirms that pertussis vaccinations indeed are effective. If clinicians take the perspective of our public health colleagues, then we ought to work to preserve herd immunity and protect the most vulnerable populations.

August 23rd, 2013

Teaching Ultrasound to Internal Medicine Residents

Akhil Narang, M.D.

Welcome, everyone! I am thrilled at the prospect of sharing with you my thoughts on issues that pertain to residency training during the current academic year.

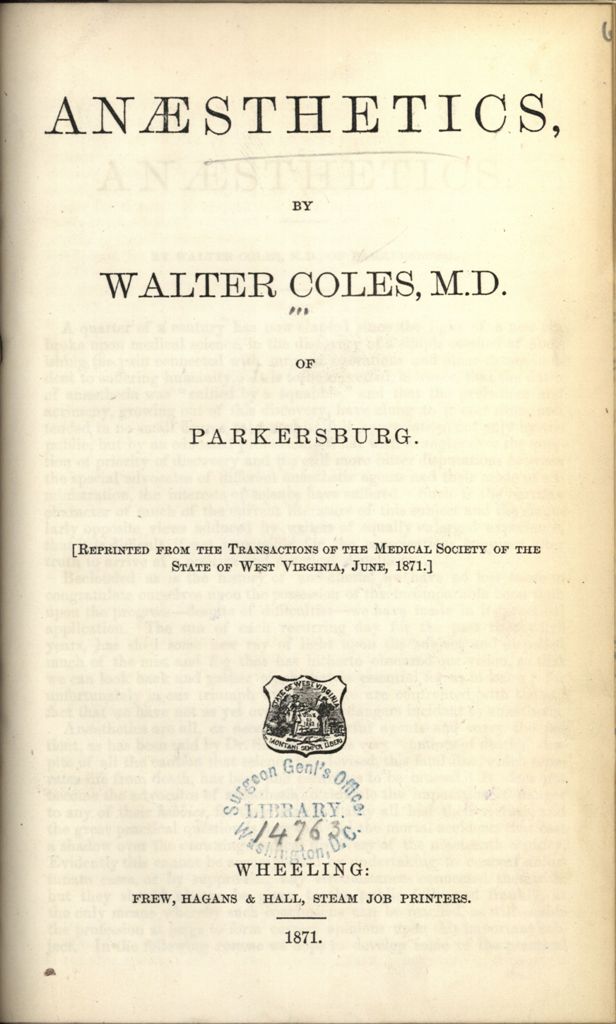

I recently attended the Fundamentals of Critical Care Ultrasound course hosted by the Society of Critical Care Medicine (SCCM). It was well attended by a mix of intensivists, anesthesiologists, and emergency medicine physicians all looking to further their practices with ultrasound skills that can be utilized on a daily basis. In two days, we gained a working ability to evaluate basic cardiac function, identify significant pericardial and pleural effusions, recognize ultrasound signs associated with a pneumothorax, perform a DVT screening exam, assess volume status, and obtain vascular access.

Informally, I polled a handful of the faculty on whether they teach their residents these skill sets. While the emergency medicine and anesthesiology faculty generally incorporated routine use of ultrasound in their teaching, few internal medicine faculty said that they do. Increasingly, internal medicine programs integrate technology in the form of iPads and smart phones into their curriculums, yet few programs teach ultrasound.

During the evenings and nights, when attendings and fellows are typically away from the hospital, resident interpretations of chest radiographs, CT scans, and EKGs are routine elements used to aid clinical decision-making. Similarly, ultrasound can and should be used in a variety of settings as an extension of the physical exam.

A needs assessment [1] of medical students and internal medicine residents conducted in 2010 showed a clear desire to integrate ultrasound training into established curriculums. The natural question asked: is it feasible to teach internal medicine residents basic ultrasound skills that are readily applied to clinical care? Without hesitation, I would answer yes!

While a growing body of evidence in the emergency medicine and even medical school curriculum literature highlights successful implementation of an ultrasound curriculum, less has been published with respect to internal medicine residency programs. Several small studies have shown the effectiveness of ultrasound simulation modules [2], resident-driven assessment of cardiovascular function using ultrasound [3], and heightened procedural confidence with ultrasound [4].

While our residents at the University of Chicago have been using ultrasound for years to aid in procedures, we have not utilized a consistent curriculum. We are now in the midst of creating a standardized ultrasound curriculum for the internal medicine residency program, the brainchild of a former Chief Resident, Dr. James Town. The curriculum provides formalized instruction on easy-to-acquire skills: using ultrasound for central venous access, measurement of IVC and IJ diameters for volume assessment, and DVT screening. Planned elements for the next phase of education will include ultrasound use for thoracentesis/paracentesis, evaluation of cardiac function, and identification of a pneumothorax.

Starting this year, we conducted formal ultrasound training sessions for nearly all of our housestaff. Following a pre-test and introductory lecture, interns and residents practiced ultrasound skills in groups of three or four on live models with the aid of a faculty member.

We also plan to host weekly “office hours” whereby trained faculty members in pulmonary/critical care and cardiology will be available on the wards to review images/clips that residents save onto USB drives. In addition, we are developing an ultrasound library of collected images/clips that can be used for future teaching and training.

Ultimately, our goal is to create a proficiency list of ultrasound skills each resident must accomplish by the time he or she graduates. While it might take years before the American Board of Internal Medicine mandates that residents have competency in ultrasound use, there is no question that ultrasound will increasingly find its way into internal medicine graduate medical education. Better to start sooner rather than later!

1. J Clin Ultrasound 2010 Oct; 38:401.

2. BMC Med Educ 2011 Sep 28; 11:75.

3. J Hosp Med 2012 Sep; 7:537.

4. J Grad Med Educ 2012 Jun; 4:170.